MindDrawPlay

from Leipzig & Rostov with Love :)

MindDrawPlay is a research project in interactive, educational audio-visual art. It translates brain waves and heartbeat into visual and sound spaces, flows and controls.

It grew out of research work on Brain-Computer Interfaces and heart activity analysis. The project integrates several applications and biosignal recording devices.

The story

MindDrawPlay started in 2017 as a hobby project during my PhD in computational neuroscience at the Max Planck Institute for Human Cognitive and Brain Sciences in Leipzig.

When I got a MindWave Mobile EEG device just to play with, I was curious how I could use it in an artistic way, connecting brain wave patterns to sound samples and drawing. I couldn't imagine how far and deep it would go in my life.

Motivation

The main idea is to represent the inner state of a person in dynamic, interactive ways with audio-visual biogenerative and AI models, to analyze brain and heart responses, and to adjust the interaction between them.

The key aspects:

1. Integrative

- utilization of both brain and heart activity signals and metrics

- the base module (NeuroMembrane interface) can be combined with a variety of media (generative, neuroart, VJing, video streams, motion sensor)

- support for different neurointerfaces (BrainBit, Neiry, Muse, MindWave)

2. Biogenerative

- immersive visuals for the biofeedback experience, based on novel generative and neuroart models, enhance involvement in the interaction process

3. Neuroadaptive

- adjustment of model parameters based on changes in the person's mental state: different levels of Attention estimation provide more interactivity, that is, more options for model tuning and higher sensitivity and dynamics of translation

The main directions:

technical and artistic

1) to process the brain and the heart activity with mobile devices

2) to extract valuable quantitative metrics of mental and emotional states

3) to create audio-visual real-time models incorporating obtained metrics

4) to adjust parameters of the models according to changes of the state

5) to analyze recorded biosignals data to study biofeedback experience

social and media

6) to exhibit the project and collect feedback from different people

7) to make the project available as an open-source / low-cost product

8) to bring mobile neurotechnology from labs and industry to daily experience

9) to expand communication and expression forms by biosignals augmentation

ontological

10) to learn more about the brain and the heart, to explore new tools and the mindspace

example of brain and heart reactions to music: Kugo-Yeya

Current state

MindDrawPlay integrates audio-visual translation in the form of biogenerative models and neuroart, provides a unique experience of immersion in and interaction with brain and heart activity, and includes options for analysis of the recorded biosignals.

One of the project's key components is the NeuroMembrane module, which can be considered a universal interface for biosignals. Thanks to TouchDesigner's integrative approach to modules, it can be easily extended to a wider range of metrics and projects, and used with other applications by streaming the biosignals (as a separate visual layer via Spout) and their quantitative metrics (via the OSC protocol).

Current MindDrawPlay use cases and examples can be split into several directions:

1) biogenerative flows controlled by Attention / Meditation / brain waves expressions

2) animations based on neuroart images, audio-visual books, NFT collections: Revelations of Dreams, MindMembrane

3) real-time biogenerative neuroart (integration with StreamDiffusion)

4) VJing flows (using external music and multi-layers geometries)

5) brain and heart reactions to external music

6) meditation sessions with biosignals recording

7) live performances as a VJ or with dancing artists

8) demonstrations of the project

MindDrawPlay is currently available for personal use or small group demonstrations and supports a limited number of biosignal recording devices. The plan is to make the main part of the project's functionality available to anyone with any neurointerface, so that people can have and share a vibrant and profound biofeedback experience.

Overview and examples

brief overview of the project with BrainBit (EEG) + Callibri (ECG)

Biogenerative Sphere with adaptive Attention levels, Statistics

Integration with neuroart: neuroadaptive animation flow, Revelations of Dreams collection

Biogenerative Brain (as a point cloud)

Integration with Kinect: motion sensor based particles modulated by Attention level

MindTube: Attention modulation on particles flow

MindTunnel (biogenerative audio spectra flow)

Statistics module updates

Biogenerative Cubes with adaptive adjustment of Attention estimation

Biogenerative Neuron (particlesGPU)

Brain & heart responses to music with adaptive Attention modulation on audio spectra visualization

Meditation with biosignals recording

MindGeometry: adaptive adjustment of base geometries for instancing of the structure

Integration with neuroart: Attention modulates transitions between images, Revelations #143

About multi-user neurofeedback (prototype with BrainBit and BrainLinkLite devices)

Biosignals Augmentation (gaming case, Everspace2)

3 examples of biogenerative visuals, markers and Statistics module

Biogenerative 3D Physarum

Integration with neuroart: neurointeractive images change, MindMembrane collection

VJing with biosignals

MindCyclone: brain and heart modulations on particles flow

Integration with neuroart: Attention controls parameters of real-time StreamDiffusion

MindRays (biogenerative neuroadaptive)

Biosignals Augmentation (daily snow story)

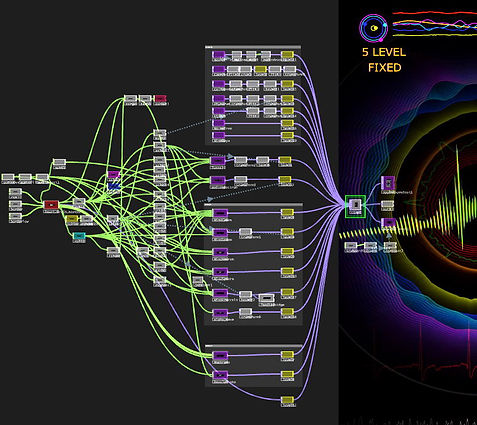

NeuroMembrane as the base universal module for biosignals integration: TouchDesigner network with audio spectrum visualization

early MindDrawPlay application with brain waves streaming to TouchDesigner via OSC protocol (collaged with 3D brain from Resolume)

MindDrawPlay (2025, v.3.0)

Bands Utility (processing of EEG/PPG signals)

Implementation

The project includes several Qt / C++ applications dedicated to acquiring and processing brain wave (EEG) and heartbeat (ECG/PPG) signals from different mobile neurointerfaces (BrainBit / NeiryHeadband / Muse2) and the mobile cardio device Callibri.

The core of the signal-processing mathematics, as well as the audio translation of biosignals, is also performed in these applications. The acquired biosignals and extracted metrics are then streamed to TouchDesigner (TD) via the OSC protocol.
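As a rough illustration of the streaming step, here is a minimal Python sketch of how a single metric could be packed into an OSC 1.0 message and sent over UDP. The project's own applications are written in Qt / C++; the address `/mdp/attention`, the port 7000 and the helper names are assumptions made for this example, not the project's actual naming.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC 1.0 string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Encode one OSC message carrying a single float32 argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern (hypothetical name)
        + osc_pad(b",f")                  # type tag string: one float argument
        + struct.pack(">f", value)        # big-endian 32-bit float
    )


def send_metric(sock: socket.socket, host: str, port: int,
                address: str, value: float) -> None:
    """Send one metric as a UDP datagram to an OSC receiver."""
    sock.sendto(osc_float_message(address, value), (host, port))


if __name__ == "__main__":
    # Assumed target: an OSC receiver listening on localhost:7000.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        send_metric(sock, "127.0.0.1", 7000, "/mdp/attention", 72.0)
```

On the receiving side, an OSC In operator in TouchDesigner listening on the same port would expose the value as a channel named after the address.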

QRS Utility (processing of ECG signals)

Definitions: audio-visual translation of biosignals

Audio-visual translation of biosignals means video in which sound and visuals are directly connected to biological signals recorded from a person in the form of electrical activity. Such video is interactive with respect to the person's inner state: the presented objects change according to changes in brain and heart activity. A biofeedback relation is thereby established and presented in audio-visual form.

Definitions: biosignals

Biosignals are brain waves and heart beat, which are recorded from a person via special devices:

- Brain waves in the form of an Electroencephalogram (EEG)

- Heart beat in the form of an Electrocardiogram (ECG)

Biosignals metrics and their utilization

There are metrics derived from the brain and the heart activity based on EEG and ECG/PPG signals.

These metrics are used in two ways for creation of audio-visual translations:

1) biofeedback parameters for the modulation of generative visual models and neuroart animation

2) biofeedback parameters for the modulation of an audio translation module

For brain activity, besides the relative expression of different bands (Theta, Alpha, Beta, Gamma, in %), there are also mental levels (Attention, Meditation, Cognitive Control, Cognitive Load, in %).

All these metrics reflect the mental state of a person: being more or less focused, cognitively loaded or calm. There is no single established way to estimate Attention and Meditation levels; it can vary across fields and applications. Overall, Beta waves are clearly associated with Attention, whereas Alpha and Theta waves are associated with Meditation, but other waves also have a significant impact on the estimation of these mental levels and states. Cognitive Control is a metric based on the Beta/Theta ratio, whereas Cognitive Load is based on the Theta/Alpha ratio.
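The band-based metrics above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the input is a dictionary of absolute band powers already extracted from EEG, the function names are hypothetical, and the way MindDrawPlay maps the raw ratios to the 0-100 % levels is not described here, so the ratios are returned unscaled.

```python
def relative_expression(band_power: dict[str, float]) -> dict[str, float]:
    """Express each band as a percentage of the total power across bands."""
    total = sum(band_power.values())
    if total <= 0:
        raise ValueError("total band power must be positive")
    return {band: 100.0 * power / total for band, power in band_power.items()}


def cognitive_ratios(band_power: dict[str, float]) -> dict[str, float]:
    """Raw ratios behind Cognitive Control and Cognitive Load (unscaled)."""
    return {
        "cognitive_control": band_power["beta"] / band_power["theta"],
        "cognitive_load": band_power["theta"] / band_power["alpha"],
    }
```

For example, band powers of 4, 3, 2 and 1 (Theta, Alpha, Beta, Gamma, arbitrary units) give relative expressions of 40, 30, 20 and 10 %, a Beta/Theta ratio of 0.5 and a Theta/Alpha ratio of about 1.33.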

For heart activity, there are currently 2 metrics: Heart Rate and Pressure Index.

Heart Rate estimation is based on detection of QRS complexes in ECG signals, which is implemented with a modification of the Pan–Tompkins algorithm.

Pressure Index is a specific metric (also known as Baevsky's stress index), which is based on the analysis of heart rate variability.
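Once beats are detected, both heart metrics can be derived from the intervals between successive beats (RR intervals). The Python sketch below assumes the commonly cited form of Baevsky's stress index, SI = AMo / (2 · Mo · MxDMn), with a 50 ms histogram bin; the function names, the bin width and the use of the bin center as Mo are assumptions for illustration, and the project's QRS Utility may compute it differently.

```python
from collections import Counter


def heart_rate_bpm(rr_ms: list[float]) -> float:
    """Mean heart rate in beats per minute from RR intervals in milliseconds."""
    return 60000.0 / (sum(rr_ms) / len(rr_ms))


def stress_index(rr_ms: list[float], bin_ms: float = 50.0) -> float:
    """Baevsky's stress index: AMo / (2 * Mo * MxDMn), Mo and MxDMn in seconds."""
    # Histogram of RR intervals with fixed-width bins.
    bins = Counter(int(rr // bin_ms) for rr in rr_ms)
    modal_bin, count = bins.most_common(1)[0]
    mo_s = (modal_bin + 0.5) * bin_ms / 1000.0      # Mo: center of the modal bin
    amo_pct = 100.0 * count / len(rr_ms)            # AMo: share of intervals in it
    mxdmn_s = (max(rr_ms) - min(rr_ms)) / 1000.0    # MxDMn: variation range
    if mxdmn_s == 0:
        raise ValueError("RR intervals show no variation")
    return amo_pct / (2.0 * mo_s * mxdmn_s)
```

A narrow, peaked RR histogram (high AMo, small MxDMn) yields a high index, which is why the metric is read as a sign of stress or tension.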

Audio translation module

There are 6 sound samples in Bands Utility, playing in a loop, for the related brain activity metrics.

For the Attention-related sound, the pitch changes according to changes in the Attention level.

When you are more focused, the tone becomes higher; when you are more relaxed, the tone becomes lower.

For the other sounds, the volume changes in direct relation to the corresponding levels. Additionally, there are internal parameters to amplify the volume of Gamma (which usually has a very low level) and to decrease the volume of Alpha and Beta.

For the heart beat, a heart beat sound plays on each beat detected from the ECG signal in QRS Utility.

Every audio translation reflects the brain and heart state and its responses to the visuals during recording.
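The mapping described above can be sketched as two small Python functions. The playback-rate range (0.5x to 2x), the gain values and the function names are assumptions chosen for illustration; the actual parameters inside Bands Utility are not given here.

```python
def attention_to_playback_rate(attention_pct: float,
                               min_rate: float = 0.5,
                               max_rate: float = 2.0) -> float:
    """Map Attention (0..100 %) to a sample playback rate: more focus, higher pitch."""
    a = min(max(attention_pct, 0.0), 100.0) / 100.0
    # Exponential interpolation: equal Attention steps give equal musical intervals.
    return min_rate * (max_rate / min_rate) ** a


def level_to_volume(level_pct: float, gain: float = 1.0) -> float:
    """Map a metric level (0..100 %) to a volume in 0..1, with a per-band gain."""
    return min(max(gain * level_pct / 100.0, 0.0), 1.0)


# Hypothetical gains: boost Gamma (usually very low), attenuate Alpha and Beta.
BAND_GAIN = {"theta": 1.0, "alpha": 0.7, "beta": 0.7, "gamma": 4.0}
```

With these assumed values, an Attention level of 50 % plays the sample at its original pitch, and a Gamma level of 5 % is lifted to a volume of 0.2 instead of 0.05.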

BrainBit mobile neurointerface

Callibri multi-modal biodevice

Artifacts processing

The substantial update in MDP v.3.0 is an improvement of the signal processing part through artifact correction with the rASR (Riemannian modification of Artifact Subspace Reconstruction) approach. This technique efficiently eliminates short artifact-contaminated intervals by interpolation based on signal properties and therefore provides a more robust signal of better quality.

Below is a demonstration of how it works with the BrainBit neurointerface.

Statistics module

The Statistics module provides several ways to analyze recorded biosignals; the key aspect is the option to see changes in both brain and heart metrics for a chosen time period.

Besides, there is an option to set time markers and to stream them directly from TouchDesigner.

Integration with TouchDesigner (TD)

The main visual and interface parts are implemented inside TD projects.

TD serves as the base environment for a variety of biogenerative translations and for integration of the biosignals with neuroart (Stable Diffusion images and animation).

The core visual representation module in TD is NeuroMembrane.

NeuroMembrane is a visual representation of biosignal (brain and heart activity) metrics in the form of animated circular plots, which show the dynamics of metric changes over a specific time interval (usually 1 minute). NeuroMembrane emphasizes the most expressed brain wave at the moment with visual trace effects. "Membrane" is used in the name because the circular plots respond like a membrane to changes in brain wave expression levels: they stretch and compress. Examples: Gamma (yellow) in the image on the right, Theta (cyan) in the video in the center (high Theta flow) with an additional particle animation effect.

NeuroMembrane is the base universal module for biosignals integration.

Besides the visualization part, it also includes an adaptive Attention estimation mechanism.
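To make the circular-plot idea concrete, here is a small Python sketch of how a metric's recent history could be laid out around a circle whose radius stretches with the value, and how the most expressed band could be picked for emphasis. The real module is a TouchDesigner network; the function names, the base radius and the stretch factor are hypothetical.

```python
import math


def membrane_points(history: list[float],
                    base_radius: float = 1.0,
                    stretch: float = 0.5) -> list[tuple[float, float]]:
    """Place a metric's history (values in 0..100 %) around a circle.

    Each sample gets an angle by its position in the window; higher values
    push the point outward, so the outline stretches and compresses.
    """
    n = len(history)
    points = []
    for i, value in enumerate(history):
        angle = 2.0 * math.pi * i / n
        radius = base_radius + stretch * value / 100.0
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points


def most_expressed(bands_pct: dict[str, float]) -> str:
    """Return the band with the highest relative expression at the moment."""
    return max(bands_pct, key=bands_pct.get)
```

With a one-minute window sampled once per second, `membrane_points` would receive 60 values per metric, one closed outline per band.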

Overview of TouchDesigner networks

The current version of the main TD network and some internal modules are presented below.

the main network

NeuroMembrane network

NeuroMembrane network: Theta, Alpha, Beta, Gamma, Heart Rate, Pressure Index visualization

MindScapes network

MindGeometry network

MindNeuron network

MindSphere network

v.2.0 (2023)

This video is an overview of the 2nd version of MindDrawPlay.

At that time, the project started to include several Qt / C++ applications dedicated to processing both brain wave (EEG) and heart beat (ECG) signals from BrainBit and Callibri devices. Most of the mathematics, as well as the audio translation of biosignals, was done in these applications. The key change was incorporating TouchDesigner as the main visual interface, combined with biogenerative animations implemented there.

Audio translation

There are 2 sound samples playing in loops, one for Attention and one for Meditation: the Attention level modulates the pitch of its sample, and the Meditation level modulates the volume of its sample. For the heart beat, the corresponding sound sample plays once on each detected beat.

v.1.0 (2017-2020)

This video is an overview of the 1st version of MindDrawPlay, made as a single Qt / C++ application obtaining EEG signals from MindWave / MyndPlay / BrainLink Lite devices.

This application is no longer used much (only some modules).

Combining technological advances, such as mobile EEG devices, with musical knowledge, such as pentatonic scales (tones from hang and tank drums), it lets you see and hear brain activity represented by a set of sounds, and use brain waves as a brush for drawing, as parameters for image filtering and attention-modulated picture flows, and in "puzzle gathering", "find the same" and "go through" games.

Examples

[ update in progress ]

Attention modulates size and surface distortion of the globe.

Physarum generative particles model: Attention level modulates speed and length of particles movement, Meditation - angle of the movement.

Attention and Meditation levels modulate brush size, brush style (blurring degree) and speed of the canvas rotation.

These examples are based on the Nvidia Flex particles model.

MindFlex 1: Attention level modulates cohesion parameter of the particles movement.

MindFlex 2 & 3: Attention level modulates border area for the particles movement, several other parameters are linked with midi controller.

Based on the ParticlesGPU TouchDesigner node: the Attention level modulates the size of the particles through the sound spectrum scaling parameter, the Meditation level modulates the Drag parameter (~ particles spread).

Based on the ParticlesGPU TD node: the Attention level modulates the speed of the particles movement.

Brain activity and sound control both structure and coloring of the form: audio level modulates amplitude of waves, Attention modulates size of particles and waves period, bloom intensity level, audio reactivity delay, Meditation - glow level, music: Suduaya

Heart Brain Dance

Audio-visual translation of brain waves and heart beat in a dance performance. The music of heart and brain changes depending on dance movements and Attention level; projections of heart and brain images and waves are animated on heart beats.

Collaboration with DomTanzTheater:

choreography: Tamara Ryazantseva,

brain: Alisa wearing neurointerface,

heart: Pavel wearing cardiodevice.

Attention and Meditation levels modulate landscapes structure, sound level - saturation of landscape color.

Attention level modulates structure, Meditation and sound levels - colors.

Attention and sound levels modulate amplitude of generative waves.

Modification: Audio and Attention reactive particles: sound and Attention levels modulate size of particles and camera changes, Attention modulates area of particles, their traces length and spread, music: Klaada

Based on Particles node in TD: Attention modulates Axial force and Twist strength, Meditation - Vortex force