A new way of storytelling based on the integration of biosignals with human-AI communication

MindMembrane

MindMembrane is the 2nd audio-visual book with biosignals: translations of brain waves and heart beat.

The collection is part of the MindDrawPlay project, which emerged from the integration of Art&Science as a result of years of research and inspiration.

Every work in the collection contains 2 storylines.

1st - an interactive, neurofeedback-driven visual flow based on a specifically chosen set of Stable Diffusion images.

2nd - a visualization of brain waves and heart beat in the form of NeuroMembrane, together with a translation of biosignals to sounds.

Attention, Meditation, Theta, Alpha, Beta and Gamma levels are estimated in real time from the EEG signal during recording and linked to sound samples. For the Attention-related sound, the pitch changes with the Attention level; for the other sounds, the volume changes. Heart beat is detected from the ECG signal and also has a related sound.

Every audio translation reflects the state of the brain and heart and their responses to the images in the flow.

There are 2 key aspects of the interactive visual flow:

1st - change of images driven by the Attention level and the related balls.

2nd - opposite expression of the image and the NeuroMembrane visualization in relation to the number of balls.

Therefore, you see the visual flow driven by the Attention level and hear the music of the brain and heart recorded during the flow. Each item in the collection is a unique story with a biodigital signature of the moment in the mindspace.

It reveals and demonstrates the general global process of integration of our natural waves and their expansion with so-called "artificial intelligence".

Items of the collection

Videos are available on YouTube and NFT pages.

How does it work?

The main idea of the collection is the audio-visual translation of biosignals combined with Stable Diffusion art.

Let's start with short definitions of all terms and then explain in more detail how it is all integrated.

1) Audio-visual translation of biosignals means a video in which the sound and visuals are directly connected with biological electrical signals from a person.

Specifically, in this collection such translation is visually implemented in the form of NeuroMembrane (see the video with explanation on the right and the description below).

2) Biosignals are brain waves and heart beat, which are recorded from a person via special devices:

- Brain waves in the form of an Electroencephalogram (EEG), recorded with a mobile neurointerface (for example, BrainBit)

- Heart beat in the form of an Electrocardiogram (ECG), recorded with a mobile cardio device (for example, Callibri)

3) Stable Diffusion (SD) art - images generated by neural networks from text prompts. Most of the prompts are created with the Gemini language model, and the images are generated with the AIVision and Flux (dev, schnell) SD models.

4) NeuroMembrane - a visual representation of biosignal (brain and heart activity) metrics in the form of animated circular plots, which show the dynamics of the metrics over a specific time interval (usually 1 minute).

NeuroMembrane emphasizes the most strongly expressed brain wave at the current moment with an additional visual trace effect on the circular plot of the corresponding wave.

Technical Implementation

General Scheme

Attention, Meditation, brain waves levels estimation and audio translation.

Bands Utility (C++, Qt) is used to receive signals from a neurointerface device (BrainBit, NeiryHeadband and Muse2 are supported) via Bluetooth and process them: apply filters, remove artifacts with rASR correction, and perform signal decomposition (FFT) to extract values for specific frequency ranges - Theta, Alpha, Beta, Gamma.
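The decomposition step can be illustrated with a minimal Python sketch. It is not the utility's code (which is C++/Qt): the band edges in `BANDS`, the function names and the 128 Hz sampling rate are assumptions for illustration, and filtering and rASR artifact correction are left out. A naive DFT is used here only to keep the example dependency-free; a real implementation would use an FFT library.

```python
import cmath
import math

# Assumed band edges in Hz; the utility's exact ranges are not given in the text.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}

def band_powers(samples, fs):
    """Sum the spectral power per band for one window of EEG samples."""
    n = len(samples)
    powers = {name: 0.0 for name in BANDS}
    # Bins below the Nyquist frequency are enough for a real-valued signal.
    for k in range(1, n // 2):
        freq = k * fs / n
        # Naive DFT coefficient for bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += abs(coeff) ** 2
    return powers

def relative_levels(powers):
    """Express each band as a percentage of the total power in all bands."""
    total = sum(powers.values())
    return {name: (100.0 * p / total if total else 0.0)
            for name, p in powers.items()}

fs = 128  # hypothetical sampling rate, Hz
window = [math.sin(2 * math.pi * 10 * i / fs) for i in range(fs)]  # 1 s, 10 Hz
levels = relative_levels(band_powers(window, fs))
```

With this synthetic 10 Hz input, almost all of the power lands in the Alpha band, which is the kind of per-band level the utility passes on.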

What is Attention and Meditation?

These metrics reflect the mental state of a person: being more or less focused, excited or calm. There is no single established way to estimate Attention and Meditation levels; the exact methods vary between fields of application.

Basically, the Beta wave level is clearly associated with Attention, and the Alpha wave level with Meditation; however, other waves also have a significant impact on mental states.

Here, the estimation of both metrics is based on the relative expression of Alpha and Beta waves. The filtered and artifact-corrected EEG signal obtained inside the utility and the 6 levels are then transmitted to TouchDesigner via the OSC protocol, where they are visualized and overlaid with NeuroMembrane and Stable Diffusion images.
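To make "relative expression of Alpha and Beta" concrete, here is one possible reading as a short Python sketch. The exact formula used in Bands Utility is not stated, so the ratio below, the function name and the `coefficient` parameter are all assumptions for illustration.

```python
def attention_meditation(alpha, beta, coefficient=1.0):
    """Map Alpha and Beta levels to two 0-100 scores (illustrative formula only)."""
    total = alpha + beta
    if total <= 0:
        return 0.0, 0.0
    # Assumption: Attention follows the Beta share, Meditation the Alpha share.
    attention = min(100.0, 100.0 * coefficient * beta / total)
    meditation = min(100.0, 100.0 * alpha / total)
    return attention, meditation

attention, meditation = attention_meditation(alpha=30.0, beta=70.0)
```

In this sketch a `coefficient` below 1 makes high Attention values harder to reach, and a value above 1 makes them easier.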

What are Brain Waves?

image: diygenius.com/the-5-types-of-brain-waves

How does audio translation work?

There are 6 sound samples inside the utility, one for each related metric, playing in a loop.

For the Attention-related sound, the pitch changes with the Attention level.

When you are more focused, the tone becomes higher, and when you are more relaxed, the tone becomes lower.

For the other sounds, the volume changes in direct relation to the levels. Additionally, there are some internal parameters that amplify the volume of Gamma (which usually has a very low level) and decrease the volume of Alpha and Beta.

For Heart beat, the heart sound plays on each beat detected in the ECG signal.

Every audio translation reflects the state of the brain and heart and their responses to the images during recording.
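The pitch and volume mappings described above could look roughly like the following Python sketch. The pitch range, the gain values in `GAINS` and the function names are hypothetical; the text only states the direction of each mapping (higher Attention gives a higher tone, Gamma is amplified, Alpha and Beta are reduced).

```python
def attention_to_pitch(attention, low=0.5, high=2.0):
    """Map Attention (0-100) to a playback-rate multiplier on an exponential scale."""
    t = max(0.0, min(100.0, attention)) / 100.0
    return low * (high / low) ** t

# Hypothetical per-metric gains: boost Gamma, attenuate Alpha and Beta.
GAINS = {"meditation": 1.0, "theta": 1.0, "alpha": 0.7, "beta": 0.7, "gamma": 3.0}

def level_to_volume(metric, level):
    """Map a metric level (0-100) to a volume in 0..1 after applying its gain."""
    return max(0.0, min(1.0, GAINS[metric] * level / 100.0))

pitch = attention_to_pitch(50.0)
gamma_volume = level_to_volume("gamma", 10.0)
```

An exponential pitch curve is chosen here so that equal steps in Attention are heard as equal musical intervals; a linear mapping would work too.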

Heart beat detection and Pressure Index.

HeartBeat Utility (C++, Qt) is used to receive signals from a cardio device (via Bluetooth) and process them: detect heart beat peaks and estimate Heart rate and Pressure Index.

Heart beat detection is based on a modification of the Pan–Tompkins algorithm.
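For readers unfamiliar with the approach, below is a heavily simplified Python sketch of Pan–Tompkins-style stages (derivative, squaring, moving-window integration, thresholding). It is not the modification used in HeartBeat Utility: the band-pass filter is omitted, the threshold is fixed rather than adaptive, and all parameter values and names are assumptions.

```python
def detect_beats(ecg, fs, threshold_ratio=0.35, refractory_s=0.2, window_s=0.15):
    """Return sample indices where a beat is detected."""
    # 1) Derivative emphasises the steep slopes of the QRS complex.
    diff = [0.0] + [ecg[i] - ecg[i - 1] for i in range(1, len(ecg))]
    # 2) Squaring makes all values positive and amplifies large slopes.
    squared = [d * d for d in diff]
    # 3) Moving-window integration merges each QRS complex into one bump.
    w = max(1, int(window_s * fs))
    integrated = [sum(squared[max(0, i - w + 1): i + 1]) / w
                  for i in range(len(squared))]
    # 4) Fixed threshold (the original algorithm adapts it over time).
    threshold = threshold_ratio * max(integrated)
    refractory = int(refractory_s * fs)
    beats, above, last = [], False, -refractory
    for i, value in enumerate(integrated):
        # A beat is a rising threshold crossing outside the refractory period.
        if value > threshold and not above and i - last >= refractory:
            beats.append(i)
            last = i
        above = value > threshold
    return beats

def heart_rate_bpm(beats, fs):
    """Mean heart rate from the first to the last detected beat."""
    if len(beats) < 2:
        return 0.0
    mean_rr_s = (beats[-1] - beats[0]) / (len(beats) - 1) / fs
    return 60.0 / mean_rr_s

# Synthetic test signal: one triangular spike per second, sampled at 250 Hz.
fs = 250
ecg = [0.0] * (5 * fs)
for centre in range(125, len(ecg), fs):
    for k in range(-5, 6):
        ecg[centre + k] = 1.0 - abs(k) / 5.0

beats = detect_beats(ecg, fs)
rate = heart_rate_bpm(beats, fs)
```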

Pressure Index is a specific metric (Baevsky's stress index) based on the analysis of Heart rate variability. Currently, it does not have an audio translation in my model, only a visual representation of the value. However, I consider it valuable, because it describes how the heart is functioning in more detail than the Heart rate value alone.
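Baevsky's stress index is commonly written as SI = AMo / (2 · Mo · MxDMn), where Mo is the most frequent RR interval, AMo is the percentage of intervals that fall into the modal histogram bin, and MxDMn is the range of RR intervals. The Python sketch below follows that common definition; the 50 ms bin width, the use of the bin centre for Mo and the function name are assumptions, and the utility's own computation may differ.

```python
from collections import Counter

def baevsky_stress_index(rr_intervals_s, bin_width_s=0.05):
    """Stress index SI = AMo / (2 * Mo * MxDMn) from RR intervals in seconds."""
    if len(rr_intervals_s) < 2:
        raise ValueError("need at least two RR intervals")
    # Histogram the intervals into fixed-width bins (50 ms assumed here).
    bins = Counter(int(rr / bin_width_s) for rr in rr_intervals_s)
    mode_bin, mode_count = bins.most_common(1)[0]
    mo = (mode_bin + 0.5) * bin_width_s                 # Mo: centre of the modal bin, s
    amo = 100.0 * mode_count / len(rr_intervals_s)      # AMo: share of intervals in it, %
    mxdmn = max(rr_intervals_s) - min(rr_intervals_s)   # MxDMn: variation range, s
    if mxdmn == 0:
        raise ValueError("RR intervals have zero range")
    return amo / (2.0 * mo * mxdmn)

si = baevsky_stress_index([0.81, 0.82, 0.83, 0.78, 0.91])
```

A narrow spread of RR intervals (low variability) gives a small MxDMn and a large AMo, and therefore a higher index.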

The filtered ECG signal obtained inside the utility, the Heart rate value and the Pressure Index are then transmitted to TouchDesigner via the OSC protocol, where they are visualized and overlaid with NeuroMembrane and Stable Diffusion images.
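As an illustration of what travels over OSC, here is a minimal Python sketch that encodes a float message following the OSC 1.0 layout and sends it over UDP. The address `/heart/rate`, the host and the port are hypothetical; the addresses and ports used by the utilities and the TouchDesigner project are not given in the text.

```python
import socket
import struct

def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload

def send_osc(packet: bytes, host: str = "127.0.0.1", port: int = 7000) -> None:
    """Send one OSC packet over UDP (host and port are placeholder values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))

packet = osc_message("/heart/rate", 60.0)
```

On the receiving side, an OSC input listening on the same port would see one float value under that address.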

image: Explode / Shutterstock.com

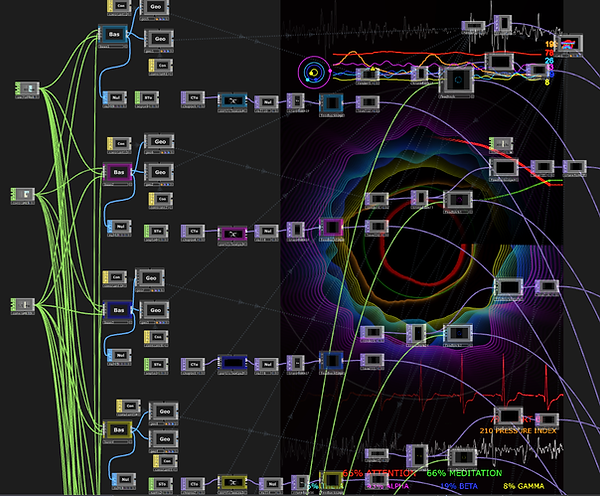

TouchDesigner (integration of all parts)

All obtained biosignals, their metrics and the visual animation flow with Stable Diffusion images are combined in one TouchDesigner project.

NeuroMembrane module: the Membrane part of the name comes from the way the circular plots respond like a membrane to changes in brain wave expression levels - they stretch and compress (for example, yellow - Gamma on the right plots).

OSC receive part, where biosignals and their metrics are obtained from the utilities

Circular plots visualization part, where Theta, Alpha, Beta, Gamma wave expression levels and Heart Beat, Pressure Index dynamics are represented;

not all nodes are in use, some are more like experimental options

Tunnel: an additional flow whose opacity and speed of movement are modulated by the Attention level

Special cases

The work on the collection has some experimental, research aspects; therefore, there are some variations in NeuroMembrane and the visual representations.

For example, in Items 001-005 there are additional particle elements for each of the 4 brain waves, which appear from the corresponding circular plots and move to the center. The number of particles is related to the brain wave expression level; thus, with higher Alpha there are more violet particles.

Later, this option is not used, because it makes the visual space too dense.

In Items 011 and 031 there are different transitions:

011 - slide show changes, with a new image each second.

031 - particlesGPU-based transitions.

Item 001: additional particles effect

Item 011: slide show transitions

Item 031: particlesGPU transitions

Opposite expression and randomness in transitions

There are 2 key features of the collection, which are directly related to the changes of images (transitions).

1st is the opposite expression of the NeuroMembrane visualization and the Stable Diffusion images.

It works according to the ball counting mechanism: the number of balls increases when the Attention level is higher than 80% (in the 1st items 60%) and decreases when it is below 60% (in the 1st items 40%). When the number of balls reaches 200, there is a switch to the next image; the switch is always in random order.

The number of balls modulates the opacity of NeuroMembrane and the SD image in opposite ways: with more balls, the image is more visible and NeuroMembrane less.

How fast the balls grow is directly related to Attention, to your mental state.

In Bands Utility there is an option to adjust the Attention estimation coefficient, which allows you to control how difficult it is to reach higher levels of focus and, thus, to have slower or faster changes of the images.

Therefore, you have an interactive, neurofeedback-driven audio-visual flow / movie.
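The ball counting mechanism can be sketched as a small state machine in Python. The thresholds (80% / 60%) and the limit of 200 come from the description above; the step of one ball per update, the reset to zero after a switch, the rule that the next image differs from the current one, and the linear opacity mapping are assumptions, as are all names.

```python
import random

class BallCounter:
    def __init__(self, image_count, up=80.0, down=60.0, limit=200, seed=None):
        self.image_count = image_count
        self.up, self.down, self.limit = up, down, limit
        self.balls = 0
        self.rng = random.Random(seed)
        self.image = self.rng.randrange(image_count)

    def update(self, attention):
        """Advance one tick; return True when the image was switched."""
        if attention > self.up:
            self.balls += 1
        elif attention < self.down:
            self.balls = max(0, self.balls - 1)
        if self.balls >= self.limit:
            # Assumption: pick a different image at random and restart the count.
            others = [i for i in range(self.image_count) if i != self.image]
            self.image = self.rng.choice(others)
            self.balls = 0
            return True
        return False

    def opacities(self):
        """Return (image_opacity, membrane_opacity); the two always sum to 1."""
        image = self.balls / self.limit
        return image, 1.0 - image

counter = BallCounter(image_count=1111, seed=1)
first_image = counter.image
switched = [counter.update(90.0) for _ in range(200)]
```

Between the two thresholds the count stays unchanged, so the image holds steady while Attention hovers in the 60-80% range.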

2nd - randomness as it is, and how your brain makes connections between images.

Currently, there are 1111 source space images, which gives a huge total number of possible different transitions, especially for a 1-minute story, which usually has around 14 transitions.

It can be computed using the number of permutations:

number of single transitions

number of transitions in 1 minute

MindMembrane stories have a huge variety and are always different.
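One way to estimate this variety, assuming that order matters and that an image does not repeat within a story (neither is stated explicitly), is to count ordered sequences of distinct images. In the Python sketch below a story with `transitions` switches shows `transitions + 1` images; the function name is hypothetical.

```python
import math

def story_count(images, transitions):
    """Ordered sequences of distinct images: transitions + 1 images per story."""
    return math.perm(images, transitions + 1)

single = story_count(1111, 1)   # ordered pairs of distinct images
minute = story_count(1111, 14)  # a story with around 14 transitions
```

Under these assumptions a single transition has 1111 × 1110 = 1,233,210 possibilities, and a 14-transition story has on the order of 10^45.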

When you experience the flow with random changes of images, your brain makes semantic connections between them and can respond differently to different images, which you can observe in the NeuroMembrane visualization and in how the transitions go.

Each item in the collection is a unique story with a biodigital signature of the moment in the mindspace. It reveals and demonstrates the general global process of integration of our natural waves and their expansion with so-called "artificial intelligence".

The collection is constantly evolving with the addition of new SD models; for example, starting from item 045, the 1st image in the story is always a video generation.

There are many potential updates in biosignal processing, metrics extraction, audio translations and transition types.

MindMembrane is the 2nd audio-visual book with biosignals and Stable Diffusion.

You can read about the 1st book, Revelations of Dreams, and see its stories and how it differs.

MindMembrane is the moment when I clearly realized that what I'm doing and what I would love to do is not just audio-visual Art&Science, but research and storytelling.

It became a significant part of my life's book. Being an audio-visual writer with biosignal translation is the kind of novel and transformative experience that you want to share with the world.

I'm open to any questions and potential collaborations / exhibitions / performances, and will be glad to communicate about my favourite projects or any related topic.